GenAI Weekly News Update 2024-07-22

News Update

Summary

Meta released Llama 3.1, an open-source AI model with improved reasoning, multilingual support, and a larger context window. DeepMind's AlphaProof and AlphaGeometry 2 together achieved a silver-medal standard at the International Mathematical Olympiad. OpenAI introduced SearchGPT, a new AI search tool, while Mistral published a 123B model designed for single-node inference. Harvey, an AI startup, raised $100 million in Series C funding. Research on Generation Constraint Scaling showed that scaling the readout vector in models with explicit memory mechanisms can significantly reduce hallucinations.

Model Update

Meta published Llama 3.1

Meta open-sourced its latest AI model, whose largest version Meta reports is competitive with leading closed models such as GPT-4 and Claude 3.5 Sonnet. The instruction-tuned model comes in three sizes: 8B, 70B, and 405B. It offers stronger reasoning, a larger 128K-token context window, and improved support for 8 languages, among other enhancements. The license has also been updated to allow developers to use the outputs of Llama models, including 405B, to improve other models.

Highlights from the technical report

Use straightforward, stable methods:

- A standard dense Transformer rather than a Mixture of Experts (MoE) architecture, chosen for training stability.

- Post-training uses only supervised fine-tuning (SFT), rejection sampling (RS), and direct preference optimization (DPO), avoiding the instability of more complex reinforcement learning (RL) algorithms.
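Of these three methods, DPO is the one with a compact closed-form objective, which is part of why it is more stable than RL-based preference tuning. A minimal sketch of the standard DPO loss (Rafailov et al., 2023) for a single preference pair follows. It takes summed sequence log-probabilities as plain floats, and `beta = 0.1` is an assumed value, not necessarily the one Meta used; any Llama-specific modifications to DPO are not reproduced here.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value for illustration
) -> float:
    """DPO loss for one (chosen, rejected) pair: -log sigmoid(beta * margin)."""
    # Log-ratio of the trained policy vs. the frozen reference model, per response.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


# While the policy still equals the reference, the loss is log(2) ~= 0.693.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# Making the chosen response more likely (relative to the reference) lowers the loss.
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))
```

No reward model or on-policy sampling loop is needed: the loss depends only on log-probabilities from the policy and a fixed reference model.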

Use synthetic data:

- For coding: They used execution feedback, translation between programming languages, and back-translation to produce data for code generation, explanation, documentation, and debugging.

- For multilingual capabilities: They generated synthetic data for speech translation using the NLLB toolkit to create translations in low-resource languages.

- For math and reasoning: They used Llama 3 to generate step-by-step solutions for math problems, which were then filtered for correctness.

- For long context: They generated synthetic question-answer pairs for long documents by splitting them into chunks and using an earlier version of Llama 3 to generate QA pairs for those chunks.

- For tool use: They produced synthetic data for single-step and multi-step tool use scenarios, including prompts that require tool calls and appropriate responses.

- For safety and alignment: They applied techniques like Rainbow Teaming to generate diverse adversarial prompts for safety testing and synthetic preference pairs by using text-only LLMs to edit and introduce errors in supervised fine-tuning data.
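The coding and math bullets share a generate-then-filter pattern: sample candidate solutions, then keep only those that pass an automatic check. A much-simplified sketch of execution-feedback filtering follows. The candidate strings stand in for model outputs, and `passes_tests` and `filter_candidates` are hypothetical helpers, not Meta's pipeline. A real pipeline should run untrusted generated code in a sandbox rather than with `exec`.

```python
from typing import Dict, List, Tuple

# A test case is (positional args, expected return value).
TestCase = Tuple[tuple, object]


def passes_tests(source: str, func_name: str, tests: List[TestCase]) -> bool:
    """Execute candidate code and check the named function against unit tests."""
    namespace: Dict[str, object] = {}
    try:
        exec(source, namespace)  # NOTE: sandbox untrusted code in real use
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in tests)
    except Exception:
        # Syntax errors, runtime errors, or a missing function all count as failures.
        return False


def filter_candidates(candidates: List[str], func_name: str, tests: List[TestCase]) -> List[str]:
    """Keep only the candidates whose execution passes every test."""
    return [c for c in candidates if passes_tests(c, func_name, tests)]


# Hypothetical model outputs for the prompt "write add(a, b)".
candidates = [
    "def add(a, b):\n    return a + b\n",
    "def add(a, b):\n    return a - b\n",  # wrong logic
    "def add(a, b) return a + b",  # syntax error
]
tests = [((1, 2), 3), ((-1, 1), 0)]

kept = filter_candidates(candidates, "add", tests)
print(len(kept))  # 1
```

The same filter shape applies to the math bullet if the test is swapped for a check of each step-by-step solution's final answer against a known result.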

Model Evaluation

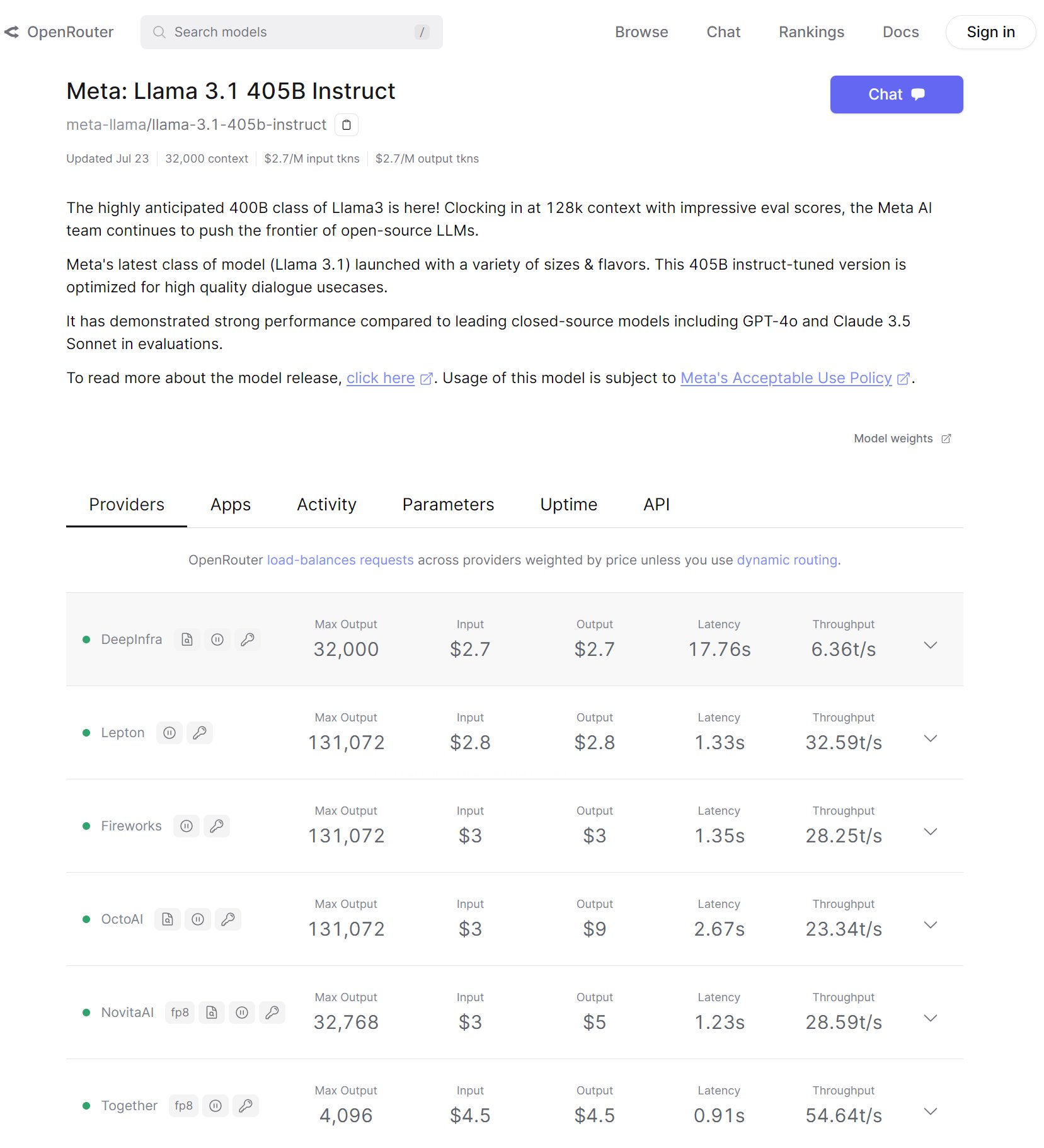

Pricing

There is an interesting discussion on X about how Lepton AI achieves such low pricing.

DeepMind announced AI achieves silver-medal standard at the IMO

DeepMind presents AlphaProof, a new reinforcement-learning-based system for formal math reasoning, and AlphaGeometry 2, an improved version of its geometry-solving system. Together, these systems solved four of the six problems from this year’s International Mathematical Olympiad (IMO), reaching the standard of a silver medalist; this is the first time an AI system has achieved this level.

It is not yet directly comparable to a human IMO participant. There is still a human in the loop: each problem had to be manually translated into a formal mathematical language before the system could work on it. The time taken also differs greatly. Most problems took the system 2 to 3 days, whereas human contestants get two 4.5-hour sessions with three problems each. Even so, it is notable work that extends the frontier of mathematical reasoning.
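To make "formal mathematical language" concrete: AlphaProof works in the Lean proof assistant, where a statement must be written in Lean's syntax and every proof is checked by machine. The toy example below (deliberately trivial, not an IMO problem) shows an informal statement next to its Lean 4 formalization, proved with the core library lemma `Nat.add_comm`. Faithfully formalizing an IMO statement is much harder, which is why the manual translation step matters.

```lean
-- Informal: "For all natural numbers a and b, a + b = b + a."
-- Formal statement and proof in Lean 4 (core library only, no Mathlib needed):
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b
```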

As noted by Terence Tao:

- AI milestone: DeepMind's tools solved 4/6 IMO problems, advancing AI capabilities in geometry and formalized mathematical problems.

- Formal math benefits: Could improve automation in formal mathematics; generated proof database may be valuable if shared.

- Novel approach: Uses reinforcement learning and formal methods, expanding AI problem-solving tools without mimicking human intelligence.

OpenAI announced SearchGPT

OpenAI demos a new AI search experience that aims to compete directly with Google and Perplexity. The prototype is currently available only to a small group of test users.

Early feedback on X indicates that the experience is fast and optimized for common queries such as stock prices and weather. The demo also shows charts, text, and links combined on a single page.

Mistral published 123B model suitable for single-node H100 inference

The model, Mistral Large 2, is significantly more capable in code generation, math, and reasoning than Mistral's previous models. Highlights include:

- Fine-tuned to reduce hallucinations and acknowledge when it cannot find solutions or lacks sufficient information to provide a confident answer.

- Large improvement in instruction-following and conversational capabilities.

- Supports 13 languages.

- Enhanced function calling and retrieval skills.

- Released under the Mistral Research License, which allows research and non-commercial use.
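A back-of-the-envelope calculation shows why "single node" rather than "single GPU" is the right framing. Counting weights only (no KV cache, activations, or framework overhead), a 123B-parameter model needs roughly 246 GB at 16-bit precision, while one H100 has 80 GB. The sketch assumes the 80 GB H100 variant and decimal gigabytes; `weight_memory_gb` and `min_gpus_for_weights` are illustrative helpers.

```python
import math

H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "bf16": 2.0,
    "fp8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: float,
                         gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Lower bound on GPUs needed just to hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gpu_memory_gb)


params = 123e9
for dtype, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(params, nbytes)
    print(f"{dtype}: {gb:.1f} GB, >= {min_gpus_for_weights(params, nbytes)} x H100")
# bf16: 246.0 GB, >= 4 x H100
# fp8: 123.0 GB, >= 2 x H100
# int4: 61.5 GB, >= 1 x H100
```

At bf16 the weights alone span at least four GPUs, so a typical 8-GPU H100 node (640 GB total) fits the model with headroom for the KV cache that long-context serving requires. Only aggressive 4-bit quantization would squeeze the weights onto a single card.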

AI Company Update

Harvey announced $100m Series C at $1.5B valuation

Harvey, an AI startup based in San Francisco, secured a $100 million Series C funding round. GV led the investment, with participation from OpenAI, Kleiner Perkins, and Sequoia Capital, valuing the company at $1.5 billion. The round was well below the $600 million at a $2 billion valuation that the company reportedly sought. As previously reported by The Information, Harvey intended to use the new funding to acquire the legal research tool vLex. The company already generates revenue: in December, it hit $10 million in annual recurring revenue (the subscription revenue it expects to generate over the next 12 months), up from less than $2 million in April 2023.

Research Update

Generation Constraint Scaling Can Mitigate Hallucination

The paper builds on recent research (Berberette et al., 2024) that relates hallucination to memory as studied in psychology. Motivated by this link, the authors hypothesize that LLMs with an explicit memory mechanism should hallucinate less.

The authors use a model called Larimar, which has an external memory system. They found that by simply scaling up the "readout vector" (which helps control what the model generates) by a factor of 3 to 4, they could significantly reduce hallucinations.
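Mechanically, the intervention is a single scalar multiplication applied to the memory readout before it conditions the decoder. The sketch below uses a hypothetical toy memory with a softmax-attention read (`read_memory`) purely to produce a readout vector; Larimar's actual read operation is different, and the decoder itself is omitted. Only `scale_readout` corresponds to the technique described, with the scaling factor in the 3 to 4 range reported by the authors.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def norm(v: Vector) -> float:
    return math.sqrt(dot(v, v))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def read_memory(memory: List[Vector], query: Vector) -> Vector:
    """Hypothetical toy read: softmax-weighted sum of memory slots (NOT Larimar's read)."""
    weights = softmax([dot(slot, query) for slot in memory])
    dim = len(memory[0])
    return [sum(w * slot[i] for w, slot in zip(weights, memory)) for i in range(dim)]


def scale_readout(readout: Vector, alpha: float) -> Vector:
    """Generation constraint scaling: multiply the readout vector by a constant alpha."""
    return [alpha * x for x in readout]


memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [2.0, 0.5, 0.0]
z_read = read_memory(memory, query)
z_scaled = scale_readout(z_read, alpha=3.0)
# The decoder would now be conditioned on z_scaled instead of z_read.
print(norm(z_scaled) / norm(z_read))  # 3.0, up to floating-point rounding
```

Because the change happens at inference time on one vector, it needs no retraining, which matches the advantages listed below.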

The researchers tested their method on a dataset of Wikipedia-like biographies and compared it to an existing technique called GRACE. Their approach performed better than GRACE, improving the accuracy of generated text by about 47%. It was also much faster, generating text 10-100 times quicker than GRACE.

The key advantages of this method are:

- It's simple: just multiply a vector by a constant.

- It doesn't require any additional training of the model.

- It outperforms GRACE while being much faster.

However, this technique only works for language models that have explicit memory mechanisms like Larimar. The authors suggest that this approach opens up new possibilities for improving language models with memory systems.