GenAI Weekly News Update 2024-08-19

News Update

Research Update

Ideogram 2.0, the latest version of the AI image generator, introduces several significant upgrades that position it as a strong competitor to established tools like Midjourney and DALL-E 3. AI21 Labs, a leading AI research lab, has also launched Jamba 1.5, a new model family that offers improved performance and efficiency. a16z has released a Top 100 Generative AI Consumer Apps report, highlighting trends and emerging categories in AI-first web and mobile products.

AI Product Launch

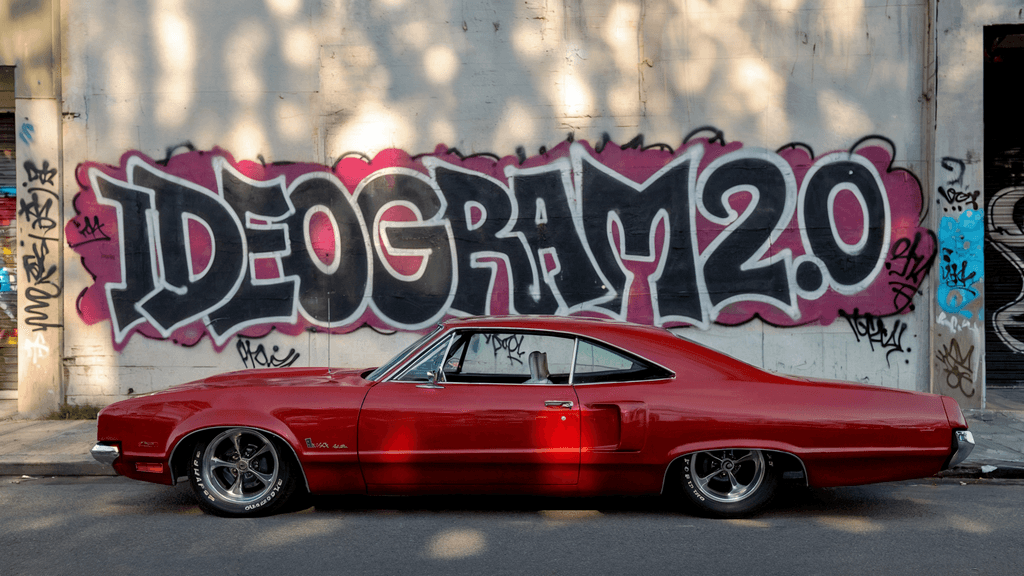

Ideogram launched Ideogram 2.0

Ideogram 2.0, the latest version of the AI image generator, introduces several significant upgrades that position it as a strong competitor to established tools like Midjourney and DALL-E 3. One of the key improvements is in text rendering, which has historically been a challenge for AI image generators. Ideogram 2.0 effectively addresses this with enhanced text accuracy, making it ideal for creating graphic designs, posters, and other visual content where text clarity is crucial.

The update also includes new features like customizable color palettes, which allow users to maintain brand consistency across digital assets, and the introduction of five unique themes that provide various stylistic options. Additionally, the realism in image generation, particularly for eyes, faces, and fingers, has been significantly improved, bringing the AI-generated images closer to photorealistic standards.

Ideogram 2.0 is now available on iOS, and the company has launched a beta API, enabling developers to integrate the technology into their applications. This version is expected to democratize access to high-quality visual content creation, making it more accessible to a wider audience, including small businesses and individual creators.

These enhancements, combined with competitive pricing, make Ideogram 2.0 a compelling choice for anyone in need of AI-generated imagery, from marketing professionals to independent artists.

For more details, you can explore the full article on AlternativeTo.

AI21 Labs Launched Jamba 1.5

AI21 Labs recently launched Jamba 1.5, a significant update to their AI model family, introducing two versions: Jamba 1.5 Mini and Jamba 1.5 Large. These models are built on a hybrid architecture that combines the strengths of Transformers with the efficiency of the Mamba architecture, which is intended to let them handle long context windows more efficiently than Transformer-only models.

Key highlights of Jamba 1.5 include the ability to process up to 256k tokens in a single context window, which AI21 Labs presents as the longest context window on offer at launch. This capability is particularly beneficial for complex, data-heavy tasks such as retrieval-augmented generation (RAG) and agentic AI workflows, where maintaining context over long sequences is critical.

The Jamba models also excel in speed and efficiency, with Jamba 1.5 Mini and Large offering faster inference times compared to similar models. This makes them highly suitable for enterprise applications that require rapid processing of large amounts of data. Additionally, these models support a wide range of languages, including English, Spanish, French, and others, further enhancing their versatility for global applications.

Jamba 1.5 is designed with developer-friendly features like native support for structured JSON output, function calling, and citation generation. The models are available for deployment on major cloud platforms, including AWS, Google Cloud, and Microsoft Azure, and are also accessible via Hugging Face.

Overall, Jamba 1.5 represents a major step forward in AI model development, particularly for applications requiring extensive context handling and high-speed processing (SiliconANGLE, AIwire, AI21 Labs).

AI Product Update

a16z Released New Top 100 AI Consumer Apps List

Andreessen Horowitz (a16z) has released the third edition of their Top 100 Generative AI Consumer Apps report, highlighting trends and emerging categories in AI-first web and mobile products. The report shows a significant rise in creative tools, with over half of the top apps focused on content generation, including new entrants in video and music. ChatGPT remains the top-ranked product, but competition is growing, with other AI-powered assistants like Perplexity and Claude making strong gains. ByteDance also made a notable entry with several AI-based apps.

For more details, visit a16z.

AI Research Update

PEDAL: Enhancing Greedy Decoding with Large Language Models using Diverse Exemplars

The paper introduces a novel approach to improving text generation in Large Language Models (LLMs). The main focus of the paper is on a technique called PEDAL, which combines diverse exemplars in prompts with self-ensembling strategies to improve the accuracy and efficiency of text generation.

Traditionally, self-ensembling methods like Self-Consistency have shown great accuracy by generating multiple reasoning paths and aggregating them to produce a final output. However, these methods typically require generating a large number of tokens, which increases the inference cost. On the other hand, Greedy Decoding is much faster but often less accurate.
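To make the cost and accuracy trade-off concrete, here is a minimal sketch contrasting the two decoding strategies. The `toy_model` function and its fixed answers are hypothetical stand-ins for a real LLM call, not part of the paper; the point is only that Self-Consistency issues many sampled generations and takes a majority vote, while Greedy Decoding issues one.

```python
from collections import Counter

# Hypothetical fixed outputs standing in for sampled reasoning paths.
SAMPLED_ANSWERS = ["42", "41", "42", "40", "42"]
GREEDY_ANSWER = "41"  # deliberately wrong, to show a single path can miss


def toy_model(question, sample_index=None):
    """Hypothetical stand-in for an LLM call that returns a final answer."""
    if sample_index is None:
        return GREEDY_ANSWER  # greedy decoding: one deterministic path
    return SAMPLED_ANSWERS[sample_index % len(SAMPLED_ANSWERS)]


def greedy_answer(question):
    """One generation, lowest cost."""
    return toy_model(question)


def self_consistency_answer(question, num_samples=5):
    """Many sampled generations, aggregated by majority vote."""
    answers = [toy_model(question, i) for i in range(num_samples)]
    return Counter(answers).most_common(1)[0][0]


question = "What is 6 * 7?"
greedy = greedy_answer(question)
voted = self_consistency_answer(question)
```

In this toy setup the vote recovers "42" at five times the number of generations, which is the inference cost the paragraph above refers to.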

PEDAL aims to bridge the gap between these two methods by using diverse exemplar-based prompts that induce variation in the LLM outputs, which are then aggregated using the LLM itself. This approach not only improves accuracy but also reduces inference costs compared to traditional self-consistency methods.
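The flow described above can be sketched roughly as follows. This is an illustration under stated assumptions rather than the authors' implementation: `toy_llm`, `build_prompts`, the prompt wording, and the round-robin exemplar selection are all hypothetical choices made to keep the example deterministic.

```python
from collections import Counter

# Hypothetical few-shot exemplars as (question, answer) pairs.
EXEMPLARS = [("1 + 1", "2"), ("2 + 2", "4"), ("3 + 1", "4"), ("0 + 3", "3")]


def build_prompts(exemplars, question, num_prompts=3, shots=2):
    """Build several prompts, each with a different set of exemplars.

    Assumption: a simple round-robin window is used here for determinism.
    """
    prompts = []
    for i in range(num_prompts):
        chosen = [exemplars[(i + j) % len(exemplars)] for j in range(shots)]
        demos = "\n".join(f"Q: {q}\nA: {a}" for q, a in chosen)
        prompts.append(f"{demos}\nQ: {question}\nA:")
    return prompts


def pedal(llm_greedy, exemplars, question, num_prompts=3, shots=2):
    """Greedy-decode each diverse prompt, then ask the LLM to aggregate."""
    prompts = build_prompts(exemplars, question, num_prompts, shots)
    candidates = [llm_greedy(p) for p in prompts]
    listing = "\n".join(f"- {c}" for c in candidates)
    aggregation_prompt = (
        f"Question: {question}\nCandidate answers:\n{listing}\nFinal answer:"
    )
    return llm_greedy(aggregation_prompt)


def toy_llm(prompt):
    """Hypothetical deterministic stand-in for a greedy LLM call."""
    if "Candidate answers:" in prompt:
        cands = [ln[2:] for ln in prompt.splitlines() if ln.startswith("- ")]
        return Counter(cands).most_common(1)[0][0]
    # Pretend one particular exemplar misleads the model.
    return "6" if "0 + 3" in prompt else "5"


final_answer = pedal(toy_llm, EXEMPLARS, "2 + 3")
```

Each candidate here is a single short greedy generation, so the total output is smaller than sampling many full reasoning paths, while the variation between prompts still gives the aggregation step something to reconcile.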

Experiments conducted on datasets like SVAMP and ARC show that PEDAL outperforms Greedy Decoding-based strategies while maintaining a lower inference cost than Self-Consistency-based approaches.

LLM Pruning and Distillation in Practice: The Minitron Approach

The paper explores the compression of large language models (LLMs) by reducing their size while maintaining performance. Specifically, the study focuses on compressing the Llama 3.1 8B and Mistral NeMo 12B models down to 4B and 8B parameters, respectively, using two primary strategies: depth pruning and joint hidden/attention/MLP (width) pruning.
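As a rough illustration of the two strategies, the sketch below prunes a toy MLP by width (dropping hidden neurons) and a toy layer stack by depth (dropping whole layers). The importance scores are an assumption for illustration: mean absolute activation over a small calibration batch for neurons, and given per-layer scores for depth. The function names are hypothetical and this is not the paper's code.

```python
def neuron_importance(activations):
    """Mean absolute activation per hidden neuron over a calibration batch."""
    num_neurons = len(activations[0])
    return [
        sum(abs(row[j]) for row in activations) / len(activations)
        for j in range(num_neurons)
    ]


def top_indices(scores, keep):
    """Indices of the `keep` highest scores, returned in original order."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])


def width_prune(w_in, w_out, activations, keep):
    """Drop low-importance hidden neurons from an MLP.

    w_in:  one row of input weights per hidden neuron
    w_out: one row per output unit, one column per hidden neuron
    """
    kept = top_indices(neuron_importance(activations), keep)
    pruned_in = [w_in[j] for j in kept]
    pruned_out = [[row[j] for j in kept] for row in w_out]
    return pruned_in, pruned_out, kept


def depth_prune(layers, layer_scores, keep):
    """Drop whole layers, keeping the `keep` highest-scoring ones."""
    return [layers[i] for i in top_indices(layer_scores, keep)]


# Toy data: 4 hidden neurons, 2 inputs, 1 output, 2 calibration samples.
w_in = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [0.1, 0.1]]
w_out = [[0.2, 0.4, 0.6, 0.8]]
acts = [[0.1, 2.0, -3.0, 0.0], [0.2, -1.0, 2.0, 0.1]]

pruned_in, pruned_out, kept = width_prune(w_in, w_out, acts, keep=2)
kept_layers = depth_prune(["L0", "L1", "L2", "L3"], [0.9, 0.1, 0.5, 0.8], keep=2)
```

Width pruning keeps every layer but makes each one narrower, while depth pruning keeps layers intact but removes some entirely; in practice the pruned model is then retrained with distillation to recover accuracy.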

The authors applied these techniques to develop two compressed models: a 4B model derived from Llama 3.1 8B and a state-of-the-art 8B model from Mistral NeMo 12B, referred to as Mistral-NeMo-Minitron-8B. They evaluated these models on common benchmarks using the LM Evaluation Harness and found that slight fine-tuning of the teacher models on the distillation dataset was beneficial, especially in the absence of access to the original data.

The research demonstrates that these compression techniques can produce models with competitive performance, and the authors have open-sourced the base model weights on Hugging Face under a permissive license, contributing to the broader research community.