GenAI Weekly News Update 2024-10-08

News Update


Cursor, an AI-powered coding tool, led the SaaS market in October in new customer acquisitions and card spending. The Cursor team discussed their work on speculative edits and attention mechanisms in an interview with Lex Fridman. Anthropic launched a Message Batches API for processing large volumes of requests asynchronously at lower cost. The research paper "Thinking LLMs" introduced thought generation to improve general instruction following in LLMs. Another paper, "LLMs Know More Than They Show", explored how signals in a model's internal states can be used to detect its own errors. Dario Amodei highlighted AI's potential for societal transformation in his essay "Machines of Loving Grace".

AI Company Update

Cursor Makes Significant Progress in October 2024 SaaS Market

Cursor, the AI-assisted coding tool, topped the SaaS market in October, leading in both new customer acquisitions and card spending. This growth reflects developers' increasing reliance on AI-powered tools that streamline coding tasks and boost productivity, and underscores how quickly AI is reshaping software development.

In addition to Cursor, companies like OpenAI, Canva, and Adobe have maintained strong positions, while Mailchimp is experiencing growth due to early Black Friday preparations. The overall SaaS market continues to evolve with a focus on AI-driven innovation.

Cursor Team: Future of Programming with AI

In a recent interview with Lex Fridman, the founding members of the Cursor team, Michael Truell, Sualeh Asif, Arvid Lunnemark, and Aman Sanger, discussed the role of AI in programming and the future of human-AI collaboration in designing and engineering complex systems.

Some technical details worth highlighting:

- Model Architecture: Custom model ensemble, integrating Mixture of Experts (MoE) for long-context inputs.

- Speculative Edits: Introduced Speculative Edits to speed up large-scale code editing. Traditional speculative decoding uses a small draft model to propose tokens, which a larger model then verifies. Speculative Edits instead use the original code as a strong prior: chunks of the existing code serve as the draft and are verified by the model in parallel, and new tokens are generated only where the edited output diverges from the original. This significantly accelerates code editing while maintaining high-quality output.

Further details are available on the fireworks.ai website.
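To make the idea concrete, here is a minimal Python sketch of speculative edits under stated assumptions. It is not Cursor's or Fireworks' implementation: `model` is a hypothetical greedy stand-in that maps a token context to its next token, a real engine verifies a whole draft chunk in one batched forward pass (simulated here with per-position calls), and the realignment rule after a divergence is a naive heuristic of our own. What it illustrates is that the draft comes from the original file rather than from a small draft model, and that the output matches plain greedy decoding; a good draft only reduces the number of verification passes.

```python
from typing import Callable, List, Tuple

Token = str
Model = Callable[[List[Token]], Token]  # hypothetical greedy model: context -> next token
EOS = "<eos>"


def greedy_decode(model: Model, prompt: List[Token], max_new: int = 256) -> List[Token]:
    """Baseline: one model call (forward pass) per generated token."""
    out: List[Token] = []
    while len(out) < max_new:
        tok = model(prompt + out)
        if tok == EOS:
            break
        out.append(tok)
    return out


def speculative_edit(model: Model, prompt: List[Token], original: List[Token],
                     chunk: int = 8, window: int = 16,
                     max_new: int = 256) -> Tuple[List[Token], int]:
    """Use the original code as the draft; return (output, verification passes)."""
    out: List[Token] = []
    src = 0     # position in `original` where the next draft starts
    passes = 0
    while len(out) < max_new:
        draft = original[src:src + chunk]
        passes += 1
        # Verification: a real engine scores every draft position in ONE batched
        # forward pass; this toy simulates that pass with per-position calls.
        correction = None
        for d in draft:
            tok = model(prompt + out)
            if tok != d:
                correction = tok  # first position where the model disagrees
                break
            out.append(d)         # draft token accepted
            src += 1
            if len(out) >= max_new:
                break
        if correction is None:
            if len(out) >= max_new:
                break
            # Whole draft accepted: the same pass also yields the next token.
            correction = model(prompt + out)
        if correction == EOS:
            break
        out.append(correction)
        # Naive realignment heuristic (an assumption, not Cursor's method): if the
        # model's token appears shortly ahead in the original, resume drafting
        # just after it; otherwise treat it as an insertion and keep `src`.
        ahead = original[src:src + window]
        if correction in ahead:
            src += ahead.index(correction) + 1
    return out, passes


def make_toy_model(prompt_len: int, target: List[Token]) -> Model:
    """Hypothetical oracle 'model' that always emits `target` after the prompt."""
    def model(context: List[Token]) -> Token:
        i = len(context) - prompt_len
        return target[i] if i < len(target) else EOS
    return model


# Toy demo: add a parameter `c` to a one-line function.
original = "def add ( a , b ) : return a + b".split()
edited = "def add ( a , b , c ) : return a + b + c".split()
prompt = ["<edit-instruction>"]
model = make_toy_model(len(prompt), edited)
out, passes = speculative_edit(model, prompt, original)
print(out == greedy_decode(model, prompt), passes)  # True 5 (greedy needs 17 calls)
```

The `chunk` size trades wasted verification work on rejected drafts against the number of passes; when the file is unchanged, almost the entire output is accepted straight from the draft.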

- Caching Strategies: Optimized KV caching that reuses previously computed attention keys and values across requests, reducing inference latency and compute.

- Attention Mechanism Optimization: Using Grouped-Query Attention (GQA) to shrink the KV cache, improving memory efficiency and inference speed.

- System Enhancements: Custom code retrieval, dynamic prompt formatting, and synthetic data generation for improved performance.
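The caching and attention items can be illustrated together with a small NumPy sketch of grouped-query attention for one decoding step, plus the arithmetic for KV cache size. The shapes and the Llama-style configuration below (32 layers, 32 query heads, 128-dimensional heads, 8K context, fp16) are illustrative assumptions, not Cursor's models. In GQA several query heads share one key/value head, so the cache that must be stored, and re-read for every generated token, shrinks by the ratio of query heads to KV heads.

```python
import numpy as np


def gqa_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Grouped-query attention without masking (e.g. one decode step over a cache).

    q: (n_heads, seq_q, head_dim); k, v: (n_kv_heads, seq_k, head_dim).
    Query heads [g*G, (g+1)*G) share KV head g, where G = n_heads // n_kv_heads.
    """
    n_heads, _, head_dim = q.shape
    n_kv_heads = k.shape[0]
    assert n_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group = n_heads // n_kv_heads
    # Expand each cached KV head to the query heads in its group. Optimized
    # kernels typically index the shared head instead of copying it.
    k = np.repeat(k, group, axis=0)
    v = np.repeat(v, group, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    scores -= scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    """Size of the K and V caches (hence the factor 2) across all layers."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


# Illustrative config (assumption): multi-head attention keeps 32 KV heads, GQA keeps 8.
mha = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=8192)
gqa = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=8192)
print(mha / 2**30, gqa / 2**30)  # 4.0 1.0  (GiB per sequence)

# One decode step: 32 query heads attend over a 100-token cache held by 8 KV heads.
rng = np.random.default_rng(0)
q = rng.normal(size=(32, 1, 128))
k_cache = rng.normal(size=(8, 100, 128))
v_cache = rng.normal(size=(8, 100, 128))
print(gqa_attention(q, k_cache, v_cache).shape)  # (32, 1, 128)
```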

Machines of Loving Grace by Anthropic CEO Dario Amodei

Dario Amodei, CEO of Anthropic, presents a forward-looking perspective on the potential for AI to transform society for the better in his essay, "Machines of Loving Grace" (October 2024). Despite often discussing the risks of AI, Amodei emphasizes that AI's upside is being underestimated, particularly in fields like biology and neuroscience. He envisions AI driving breakthroughs in curing diseases, enhancing mental health, and addressing global poverty.

Amodei highlights how AI, when responsibly managed, could accelerate progress in these areas, improving global welfare. However, he remains cautious about the risks and stresses the importance of careful oversight to prevent AI from causing harm. In his view, addressing these risks is what stands between humanity and a radically positive future shaped by AI.

Ultimately, Amodei’s essay calls for a balanced discussion, acknowledging AI’s immense potential while ensuring that its deployment is aligned with societal needs and ethical considerations.

AI Product Update

Anthropic Launched Message Batches API

Anthropic has introduced the Message Batches API, which lets users submit many Messages API requests together as a single batch that is processed asynchronously. At launch, a batch could hold up to 10,000 requests, was processed within 24 hours, and cost 50% less than the equivalent standard API calls. This makes it well suited to high-volume workloads, such as large-scale evaluations or bulk data processing, where an immediate answer is not needed.

Because results arrive asynchronously, the Batches API trades latency for throughput and cost; real-time use cases should continue to use the standard Messages API. The launch aligns with Anthropic’s goal of making its models more scalable and cost-efficient for large workloads.
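A minimal sketch of preparing and submitting a batch with Anthropic's official Python SDK (`anthropic`). Assumptions: the request shape (a `custom_id` plus the usual Messages `params`) follows Anthropic's documentation; recent SDK versions expose the endpoint as `client.messages.batches`, while at the October 2024 launch it lived under `client.beta.messages.batches`; the model name, the `request-{i}` ID scheme, the helper names, and the polling interval are illustrative choices. Check the current API reference before relying on any of it.

```python
import time
from typing import Any, Dict, List

# Per-batch request limit announced at launch (Oct 2024); check current docs.
MAX_REQUESTS_PER_BATCH = 10_000


def build_batch_requests(prompts: List[str],
                         model: str = "claude-3-5-sonnet-20240620",
                         max_tokens: int = 1024) -> List[Dict[str, Any]]:
    """Turn prompts into batch entries; custom_id maps each result back to its input."""
    if len(prompts) > MAX_REQUESTS_PER_BATCH:
        raise ValueError(f"at most {MAX_REQUESTS_PER_BATCH} requests per batch")
    return [
        {
            "custom_id": f"request-{i}",  # hypothetical ID scheme; must be unique
            "params": {                   # same fields as a normal Messages API call
                "model": model,
                "max_tokens": max_tokens,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        for i, prompt in enumerate(prompts)
    ]


def submit_and_wait(requests: List[Dict[str, Any]], poll_seconds: int = 60) -> str:
    """Submit one batch and block until processing ends; returns the batch id.

    Needs `pip install anthropic` and ANTHROPIC_API_KEY in the environment.
    """
    import anthropic  # imported lazily so the builder above works without the SDK

    client = anthropic.Anthropic()
    batch = client.messages.batches.create(requests=requests)
    # Batches run asynchronously: poll the batch status instead of holding a connection.
    while client.messages.batches.retrieve(batch.id).processing_status != "ended":
        time.sleep(poll_seconds)
    return batch.id


if __name__ == "__main__":
    reqs = build_batch_requests(["Summarize document A.", "Summarize document B."])
    print(len(reqs), reqs[0]["custom_id"])  # 2 request-0
```

Once the batch has ended, results are matched back to inputs by `custom_id`; because processing is asynchronous, this pattern suits offline jobs rather than interactive requests.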

AI Research Update

Thinking LLMs: General Instruction Following with Thought Generation

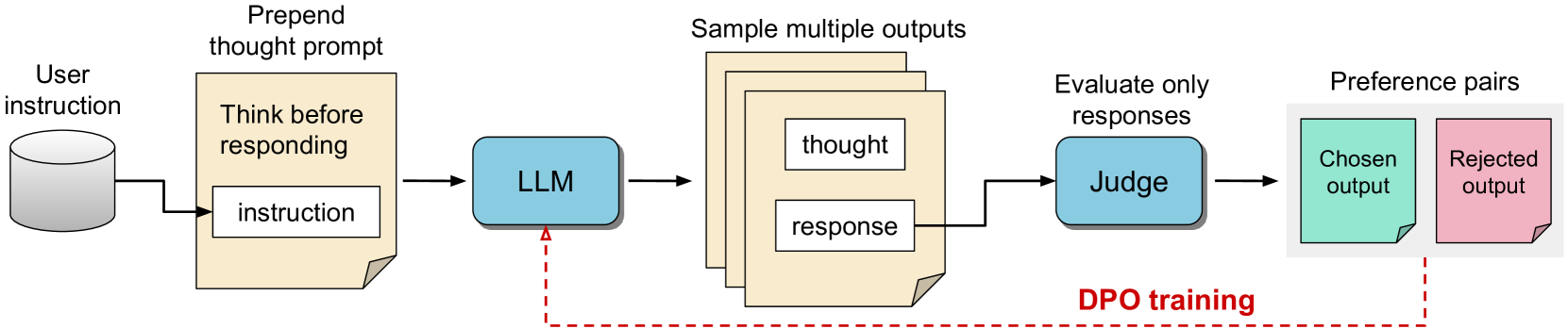

- Motivation: Current LLMs lack the ability to think explicitly before responding, which is crucial for tasks involving reasoning and planning. Although methods like Chain-of-Thought (CoT) improve reasoning tasks, they are limited in scope, mainly benefiting logic and math problems.

- Thought Preference Optimization (TPO): The paper introduces a method for training LLMs to generate internal thoughts before responding to any instruction, without requiring additional human-annotated data. The method employs an iterative search-and-optimize process:

  - The model generates multiple thought-and-response candidates for a given instruction.

  - A judge model scores only the responses; these scores are used to optimize both the thought process and the final output.

  - Unlike prior approaches, this process does not rely on direct supervision or thought-level feedback.

- Key Techniques:

  - Reinforcement Learning from AI Feedback (RLAIF): The thought generation process is optimized iteratively using preference pairs that are evaluated by the response-only judge model.

  - Length-Control: A mechanism is used to prevent the generated thoughts from becoming excessively verbose.

- Results: On the AlpacaEval and Arena-Hard benchmarks, TPO achieves win rates of 52.5% and 37.3%, respectively, outperforming non-thinking LLM baselines.
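The preference-pair construction at the heart of TPO can be sketched as follows. This is a simplified, hypothetical Python illustration, not the authors' code: `generate` and `judge` stand in for the thinking LLM and the response-only judge, and the length-control rule (z-normalized judge score minus `rho` times z-normalized output length) is an assumed form of the mechanism described above. The resulting chosen/rejected pairs would then feed a preference-optimization step such as DPO, which is omitted here, and the whole loop is repeated over several iterations.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, List, Optional, Tuple


@dataclass
class Output:
    thought: str   # hidden "internal thought", never shown to the judge
    response: str  # the part the user (and the judge) sees


def _zscore(xs: List[float]) -> List[float]:
    mu, sd = mean(xs), pstdev(xs)
    return [0.0 if sd == 0 else (x - mu) / sd for x in xs]


def build_preference_pair(
    instruction: str,
    generate: Callable[[str], Output],   # hypothetical thinking-LLM sampler
    judge: Callable[[str, str], float],  # hypothetical judge: (instruction, response) -> score
    k: int = 4,
    rho: float = 0.5,                    # assumed strength of the length penalty
) -> Optional[Tuple[Output, Output]]:
    """Sample k thought+response outputs; return (chosen, rejected), or None on a tie."""
    samples = [generate(instruction) for _ in range(k)]
    # Only the response is judged, so thoughts are optimized indirectly.
    scores = [judge(instruction, s.response) for s in samples]
    lengths = [float(len(s.thought) + len(s.response)) for s in samples]
    # Length control (assumed form): penalize outputs that are long relative to the group.
    adjusted = [s - rho * l for s, l in zip(_zscore(scores), _zscore(lengths))]
    best = max(range(k), key=lambda i: adjusted[i])
    worst = min(range(k), key=lambda i: adjusted[i])
    if adjusted[best] == adjusted[worst]:
        return None  # no preference signal for this instruction
    # The full outputs (thought + response) form the pair used for training.
    return samples[best], samples[worst]


# Toy demo with canned samples and a keyword "judge" (both hypothetical).
canned = iter([
    Output("plan", "good answer"),
    Output("x" * 60, "good answer"),  # same response, much longer thought
    Output("plan", "bad"),
    Output("hmm", "bad answer"),
])
pair = build_preference_pair(
    "Explain X.",
    generate=lambda _: next(canned),
    judge=lambda _, r: 1.0 if r.startswith("good") else 0.0,
)
chosen, rejected = pair
print(chosen.thought, "|", rejected.response)  # plan | bad answer
```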

LLMs Know More Than They Show: On the Intrinsic Representation of LLM Hallucinations

- Motivation: Understanding how truthfulness is encoded within LLMs can lead to better detection and mitigation of errors, moving beyond user-centric evaluations toward model-centric analyses. The authors also examine whether these internal representations can be used to reduce hallucinations.

- Error Detection through Internal States: The paper introduces an approach to detect LLM errors by probing the internal states of the model, focusing specifically on the exact answer tokens, that is, the tokens that make up the answer itself.

- Classifiers on Intermediate Representations: Classifiers are trained on internal activations to predict error likelihood and types. The model encodes signals of truthfulness that can be leveraged for enhanced error detection.

- Multifaceted Truthfulness: The research finds that truthfulness is not encoded uniformly across tasks. Instead, its encoding varies with the nature of the task (e.g., factual retrieval, sentiment analysis), suggesting that LLMs have task-specific truthfulness mechanisms rather than a universal one.

- Improved Error Detection: Error detection improves significantly by focusing on exact answer tokens, offering insights into how truthfulness is encoded during LLM text generation.
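A probing classifier of the kind described above can be sketched in a few lines of NumPy. Everything here is an assumption for illustration: the "activations" are synthetic vectors with an error signal planted along one random direction, standing in for hidden states one would extract from a chosen layer at the exact answer token, and the probe is a plain logistic regression trained by gradient descent. It shows only the mechanics: a linear classifier on internal representations that outputs an error probability.

```python
import numpy as np


def train_probe(X: np.ndarray, y: np.ndarray, lr: float = 0.5,
                steps: int = 500, l2: float = 1e-3):
    """Logistic-regression probe: y = 1 means the model's answer was wrong."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted error probability
        w -= lr * (X.T @ (p - y) / n + l2 * w)  # gradient of mean log-loss + L2
        b -= lr * float(np.mean(p - y))
    return w, b


def error_probability(X: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))


# Synthetic stand-in for hidden states at the exact answer token (assumption):
# 1,000 answers, 32-dim activations, error signal planted along one direction.
rng = np.random.default_rng(0)
n, d = 1000, 32
direction = rng.normal(size=d)
direction /= np.linalg.norm(direction)
y = rng.integers(0, 2, size=n)  # 1 = incorrect answer
X = rng.normal(size=(n, d)) + np.outer(2 * y - 1, direction) * 2.0

w, b = train_probe(X[:800], y[:800])
acc = np.mean((error_probability(X[800:], w, b) > 0.5) == y[800:])
print(f"held-out probe accuracy: {acc:.2f}")
```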